The brain processes images differently than a camera, which is why pictures of computer screens can look different than the real thing.

I’m going to put a picture right here to show you what I’m talking about:

The picture on the left is a screenshot of a page that I took on my laptop, while the one on the right is a photograph of the same page that I took using my smartphone. Do you notice how the two pictures of the same page look so different?

Go ahead and try it right now. Take a picture of your laptop screen, and it’s highly likely that the photo you take will be covered in weird ‘rainbow patterns’ that aren’t actually visible when you look at the screen (with the naked eye).

The same phenomenon is also observed in movies and videos when they show a TV or computer screen that’s up and running. This is especially true for old CRT screens.

So, what’s going on there? Why do pictures of computer and TV screens look so different than they do in real life?

The Brain’s Talent For Image Processing

To answer that, we first need to understand and appreciate what the brain does when our eyes feed it an image. Your eyes merely register the photons falling on them; it’s the brain that actually processes those signals and derives some sense from them, allowing you to actually ‘see’ stuff.

When you see a motion picture, the picture itself is not moving; rather, it’s a collection of multiple pictures that appear incredibly fast on the screen, which the brain then smooths out, making you think that something is actually moving on the screen. This is where something known as the frame rate comes in.

Simply put, it’s the number of images that appear on a screen per second, measured in frames per second (fps). The higher the frame rate, the smoother the motion looks on the screen.

Most Hollywood movies are shot at 24 fps, which means that when you watch one, you basically see 24 still images projected on the screen every single second! Video games, and a handful of films, run at higher frame rates to make the motion look even smoother!
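To get a feel for those numbers, here is a small Python sketch that works out how long each still image stays on screen at a given frame rate. The function name and the sample frame rates other than 24 fps are just illustrative choices, not something taken from a particular film or game.

```python
def frame_duration_ms(fps: float) -> float:
    """Return how long one still image is shown, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# 24 fps is the movie figure mentioned above; 48 and 60 are example values.
for fps in (24, 48, 60):
    print(f"{fps} fps -> {frame_duration_ms(fps):.1f} ms per frame")
```

At 24 fps, each image is on screen for roughly 42 milliseconds, which is short enough for the brain to blend the sequence into motion.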

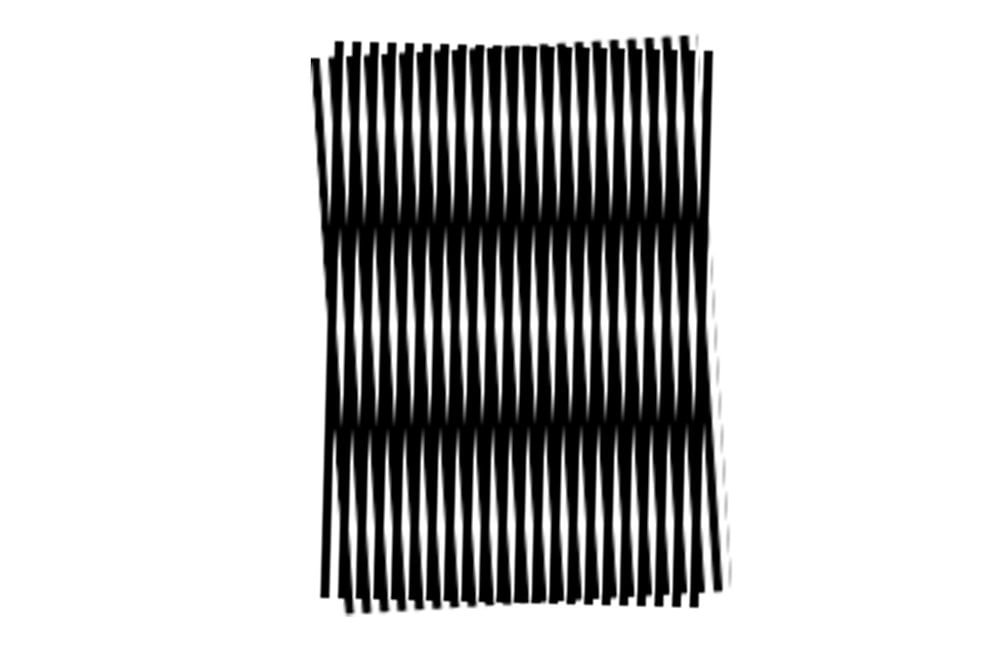

Moire

A moire pattern is an interference pattern that appears when an opaque ruled pattern with transparent gaps is overlaid on another similar pattern. Note that the two patterns must not line up perfectly: they need to differ slightly in spacing, or be rotated or shifted relative to each other.

A picture of a computer screen looks odd because the screen is made of an array of tiny dots in three colors (red, green and blue), which end up being similar in size to the red, green or blue sensor elements in the camera. This results in the formation of a moire pattern, which is why the photograph of a computer/TV screen looks as if it’s filled with arbitrary rainbow patterns (which are not really there on the screen).
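The effect can be sketched in a few lines of Python. This is a deliberately simplified one-dimensional model, assuming a screen made of evenly spaced bright and dark stripes and a camera sensor whose cells each sample a single point. It ignores color filters, lens blur and any tilt between the phone and the screen. The pitch values (10 and 11 units) are made up for illustration.

```python
import math


def moire_period(pitch_a: float, pitch_b: float) -> float:
    """Beat period of two parallel striped patterns with different pitches."""
    if pitch_a == pitch_b:
        return math.inf  # identical, aligned patterns produce no beat
    return pitch_a * pitch_b / abs(pitch_a - pitch_b)


def sample_grating(screen_pitch: int, sensor_pitch: int, n: int) -> list:
    """Brightness (1 or 0) seen by n sensor cells looking at a striped screen."""
    samples = []
    for i in range(n):
        x = i * sensor_pitch  # position of the i-th sensor cell
        # The first half of every screen period is bright, the second half dark.
        samples.append(1 if (x % screen_pitch) * 2 < screen_pitch else 0)
    return samples


# Hypothetical pitches: screen stripes every 10 units, sensor cells every 11.
print("".join(str(s) for s in sample_grating(10, 11, 20)))
print(moire_period(10, 11))
```

Even though the screen stripes alternate every 5 units, the sampled signal only flips every 5 sensor cells (55 units), giving a much coarser band that repeats every 110 units. That coarse band exists only in the photo, not on the screen.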

Refresh Rate

Another reason behind that weird-looking picture of a computer screen is the refresh rate of the screen. For the uninitiated, the refresh rate refers to the number of times a digital screen (desktop/laptop monitor, TV etc.) redraws its image per second, measured in hertz (Hz).

The higher the refresh rate of a screen, the more uniform the display looks and the less screen flickering you get.

A digital screen is refreshed multiple times per second. Our eyes don’t catch this process (because the brain smooths it out to make the screen look consistent), but a camera with a short exposure can catch the screen partway through a refresh. That’s why a picture of a computer screen can look very different from the real thing.

Older CRT screens were updated or ‘refreshed’ line by line, i.e., a scanning beam swept across the breadth of the screen, row after row, many times per second to create an image on the screen. Of course, the scanning worked so fast that the naked eye couldn’t actually see the image being drawn. Fun fact: If you have a high-speed camera, you can actually capture the scanning lines that make up an image on the screen.

If you take a picture of a CRT screen with a fast shutter, the camera only captures the part of the screen presently lit by the scanning line (whereas the brain does a lot of smoothing to make the screen look completely normal and uniform to us). That’s yet another reason why the picture of a screen looks nothing like the real thing.
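As a rough back-of-the-envelope sketch, the share of a CRT screen that gets drawn while the camera shutter is open can be estimated from the refresh rate and the exposure time. This assumes the beam sweeps the screen at a steady pace and that each spot goes dark right after the beam passes, which real phosphors don’t quite do. The 60 Hz refresh rate and the shutter speeds are example values only.

```python
def fraction_scanned(refresh_hz: float, exposure_s: float) -> float:
    """Estimate the share of the screen drawn during one camera exposure."""
    if refresh_hz <= 0 or exposure_s < 0:
        raise ValueError("refresh_hz must be positive and exposure_s non-negative")
    # One full sweep takes 1 / refresh_hz seconds; cap the result at the whole screen.
    return min(1.0, refresh_hz * exposure_s)


# Hypothetical 60 Hz CRT photographed at two example shutter speeds.
for label, exposure in (("1/1000 s", 1 / 1000), ("1/30 s", 1 / 30)):
    print(f"{label}: {fraction_scanned(60, exposure):.0%} of the screen")
```

With a 1/1000 s shutter, only about 6% of the screen is freshly drawn in the photo, which shows up as a bright band on an otherwise dim screen. A slower 1/30 s shutter covers at least one full sweep, so the whole screen appears lit.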
